09 Jun How Do We Measure Collaboration?

The challenges and benefits of incorporating hard-to-quantify questions in monitoring, evaluation & learning approaches

Katy Gorentz is the monitoring and evaluation manager for HRH2030. Juan Barco leads the HRH2030 activity in Colombia.

To harness the potential of the health workforce as a driver of better health outcomes, job creation, and economic growth, health systems must be driven by data. At the USAID Human Resources for Health in 2030 Program (HRH2030), part of our role in building a strong health workforce is to work with local stakeholders to develop the tools they need to gather, analyze, and use data for decision making. But in our work, we’ve come across concepts that are, at first glance, tough to quantify. How do we quantify and track broad concepts like coordination and organizational maturity? How do we make that information actionable?

In Colombia, we’ve set out to find solutions to these questions in partnership with the Colombian Institute of Family Welfare (Instituto Colombiano de Bienestar Familiar, ICBF), which provides comprehensive prevention, protection, and well-being services for vulnerable children, adolescents, and families across the country. Together, we want to learn what an optimized ICBF looks like – how it coordinates activities, provides services, and addresses institutional challenges – and help make that vision a reality. In this blog, we’re sharing our experience of grappling with these hard-to-quantify concepts and embracing USAID’s Collaborating, Learning, and Adapting (CLA) practices to gather useful data to drive our work with ICBF.

Tracking broad concepts like coordination

ICBF works through a decentralized approach with multiple levels of leadership, from national level strategies down to local case management “protection teams.” For this structure to work effectively, ICBF team members must coordinate well internally and have strong organizational processes. Together, we wanted to design a comprehensive assessment approach that would measure these concepts so that we could determine areas for strengthening.

To understand how to quantify something as complex as coordination, we embraced relational coordination theory, which recognizes the way that relationships and communication are intertwined and affect how an organization works. The Relational Coordination Research Collaborative of Brandeis University has created a fully validated measurement tool that breaks big theoretical coordination concepts into real-life, tangible questions about the way that members of an organization interact with their colleagues. This methodology really spoke to our needs, as it broke down large, complex concepts into survey questions that are easy for participants to answer.
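To illustrate how answers to simple survey questions can roll up into a number for coordination, here is a minimal sketch. It is not the validated Relational Coordination instrument or its scoring method: the dimension names, the 1-to-5 rating scale, and the simple averaging are all assumptions made for illustration.

```python
from statistics import mean

# Hypothetical dimension names, for illustration only;
# these are not the items of the validated survey.
DIMENSIONS = (
    "frequent_communication",
    "timely_communication",
    "shared_goals",
    "mutual_respect",
)


def coordination_score(responses):
    """Average the 1-5 ratings per dimension, then average across dimensions."""
    per_dimension = {
        dim: mean(r[dim] for r in responses) for dim in DIMENSIONS
    }
    overall = mean(per_dimension.values())
    return per_dimension, overall


# Two made-up respondents rating how they work with another office.
responses = [
    {"frequent_communication": 4, "timely_communication": 3,
     "shared_goals": 5, "mutual_respect": 4},
    {"frequent_communication": 2, "timely_communication": 3,
     "shared_goals": 4, "mutual_respect": 4},
]

per_dimension, overall = coordination_score(responses)
print(per_dimension)
print(overall)
```

Keeping the per-dimension averages alongside the overall score matters in practice: a low score on a single dimension points to a specific area for strengthening, which a single aggregate number would hide.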

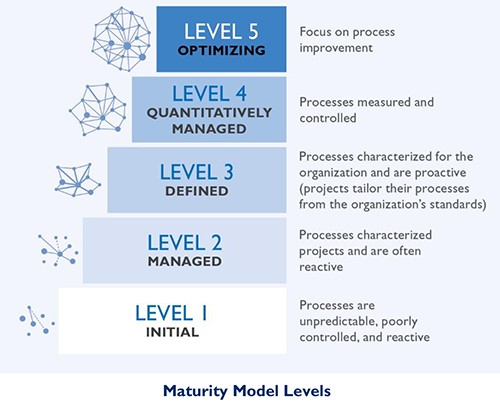

We have used similar approaches to break down big concepts at the regional level, as well as in our work on another HRH2030 activity, Capacity Building for Malaria. In these areas, we have used the Carnegie Mellon Capability Maturity Model approach to break down complex organizational processes into tangible elements that are easier to quantify (see image at right). For example, by deconstructing ICBF’s complex training systems into separate, discrete elements, we realized that ICBF staff frequently perceive training and supervision processes as “checking off a box” and that trainings could be made much more effective by envisioning and tracking how trainings impact service provider behavior.

Participatory development to promote stakeholder engagement

We worked extensively with ICBF stakeholders to translate these theories and models into an assessment framework that would inform our work together. Through participatory development of the assessment design, we were able to roll out surveys to staff at the national, regional, and municipal levels who, for the first time, were able to quantify how well they were coordinating with others in the organization and identify areas that needed strengthening.

First, we worked with ICBF stakeholders to understand ICBF: their main work processes, the different ways that offices coordinate with each other, and the levels of coordination from the national level down to the protection teams. We worked with many different stakeholders within ICBF, and specifically with the Planning and Control Directorate, the Protection Directorate, and regional directorates to develop and roll out the assessment materials in a participatory way. Once the data was in, the HRH2030 Colombia technical team shared and analyzed the results in group workshops with ICBF stakeholders so that we could identify challenges in the results together. Then, using a SMART approach, we came to a shared vision and action plans for how to proceed. This extensively collaborative process was built to ensure that ICBF staff and leaders felt recognized by and invested in the assessment process.

Gaining organizational buy-in was also important for sustainability of these learning approaches. At the regional level, we involved two directorates, the Regional Advisor Office (Oficina Asesora Regional, OAR) and the Organizational Development Subdirectorate (Subdirección de Mejoramiento Organizacional, SMO). The OAR supervises performance of 33 regional directorates around the country; however, it previously had no tool to measure progress or identify areas for improvement with the regional directorates. Similarly, the SMO, which is responsible for incorporating tools to improve ICBF’s internal collaboration, has recognized the potential of the maturity model approach to improve regional performance and has begun a validation process to align the model with other institutional tools. By engaging these stakeholders early on, we were able to ensure that our data collection and data use processes aligned with ICBF’s strategic goals.

Protecting sensitive information while gathering insights and data

In addition to ensuring organizational buy-in, it was crucial for us to gather insights from individual ICBF staff. As we implemented the assessment approach, we found that it was incredibly important to clarify that the assessments would measure processes, not persons, and that individual responses would be protected. For example, when we began data collection in Maicao, participants started by saying “everything is ok” because they thought the baseline would measure individual performance. Once our technical team explained that the models would be used to find organizational gaps and make improvements that could benefit their work, participants shared their perspectives more openly. We were careful in the assessment design and implementation to ensure that survey responses could not be linked back to individual participants.
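One common way to keep survey results from pointing back to individuals is to report only group-level averages and to suppress any group with too few respondents. The sketch below shows that general idea; it is not a description of the procedure HRH2030 used, and the office names, scores, and minimum group size are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Assumed threshold, for illustration; set it according to local privacy guidance.
MIN_GROUP_SIZE = 5


def aggregate_by_office(records, min_group_size=MIN_GROUP_SIZE):
    """Return the mean score per office, or None where too few people responded."""
    scores = defaultdict(list)
    for office, score in records:
        scores[office].append(score)
    return {
        office: (round(mean(vals), 2) if len(vals) >= min_group_size else None)
        for office, vals in scores.items()
    }


# Made-up records: (office, rating). No respondent identifiers are stored.
records = [
    ("regional_a", 4),
    ("regional_a", 3),
    ("regional_a", 5),
    ("regional_b", 2),
]

summary = aggregate_by_office(records, min_group_size=3)
print(summary)
```

Here the office with a single respondent is reported as `None` rather than as a score, since a one-person average would reveal that person's answer.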

Ensuring resources to conduct formative evaluation and CLA

HRH2030 recognized the need for collaborative monitoring, evaluation, and learning (MEL) early on, and incorporated these assessments, collaborative workshops, and data analysis processes into our workplan and budget. We identified tasks that fit within technical staff’s scopes so that MEL approaches were part of everyone’s responsibility. In this way, we were able to integrate these approaches into technical activities, rather than having them siloed in the MEL plan.

At the ICBF level, we recognized that in general, public entities frequently don’t have sufficient resources to conduct extensive methodology trainings and data collection activities. HRH2030 worked with ICBF to share efforts as much as possible during the baseline assessments, building the skills of ICBF staff along the way so that they can use these models in the future. Further, by supporting ICBF to incorporate these approaches into their own organizational guidelines, we have planned for the sustainability of these models as they are now incorporated in ICBF’s budgeting processes.

Promoting sustainable data use for learning

All of this work was informed by USAID CLA practices, including collaboration and robust formative evaluation that allowed us to build a technical evidence base and a strong, trusting relationship with ICBF. To make the most of this opportunity, we continued embracing CLA approaches by identifying formal opportunities to reflect on the data and use it for decision-making together with our ICBF colleagues. We started out with focus groups to analyze the data collection results together and collaboratively develop action plans, including identifying focal points who would be responsible for pushing activities along. We are also incorporating pause and reflect sessions to look back on the results of the baseline assessment, discuss how far we’ve come, and identify what work still needs to be done.

In these ways, ICBF and HRH2030 have used data to achieve new insights about Colombia’s social services workforce. Along the way, we are building ICBF capacity for data collection and analysis so that these learning processes are fully and sustainably incorporated in ICBF’s work. We believe ICBF’s commitment to answering tough questions and using data for decision-making will strengthen social services to benefit the children, adolescents, and families of Colombia.

***

Resources:

CLA: Our work in Colombia draws heavily from the USAID CLA Framework, guidance for facilitating Pause and Reflect activities, and examples from other international development programs that have submitted case studies to the CLA Case Competition.

Measuring coordination: We are members of the Brandeis University Relational Coordination Research Collaborative (RCRC), which provides resources for practitioners and researchers to use relational coordination theory to transform their organizations.

Measuring organizational maturity: Useful examples of maturity models for international development practitioners include the USAID CLA Maturity Spectrum (also available in other languages) and the CBM CMM Framework.

Participatory evaluation: This resource from Better Evaluation is a useful introduction to the considerations involved in designing a participatory evaluation framework.

Photo: ICBF technical staff based in the La Guajira region, voting on organizational maturity levels.